Why Explainability Is the First Real Test of Context Engineering

AI evaluates your product before people do. Builder PMs design systems that hold up without them in the room.

John is smiling as he finishes his latest product briefing with a potential customer. The conversation was full of “what-if” questions, which John reads as strong buying signals.

Later that day, he notices a competitor announcing yet another customer win. They must have shipped new features, he thinks. John asks AI for a list of the competitor’s recent features.

Answer: Nothing new in the past year.

Puzzled, John asks a different question:

What differentiates the competitor’s product from ours?

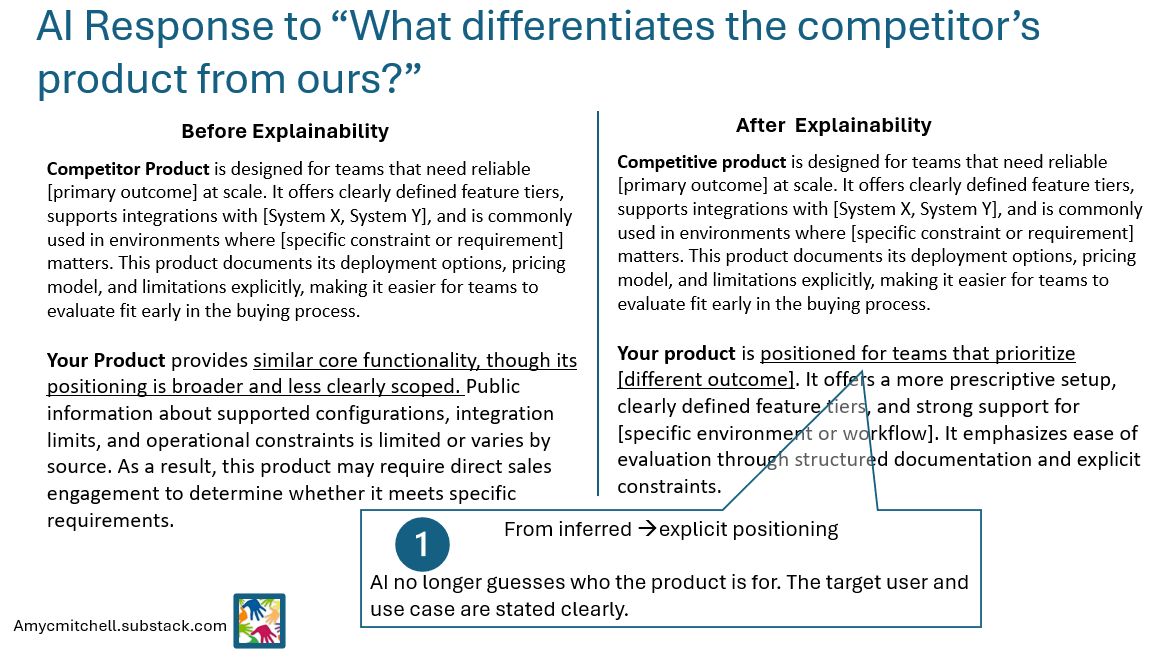

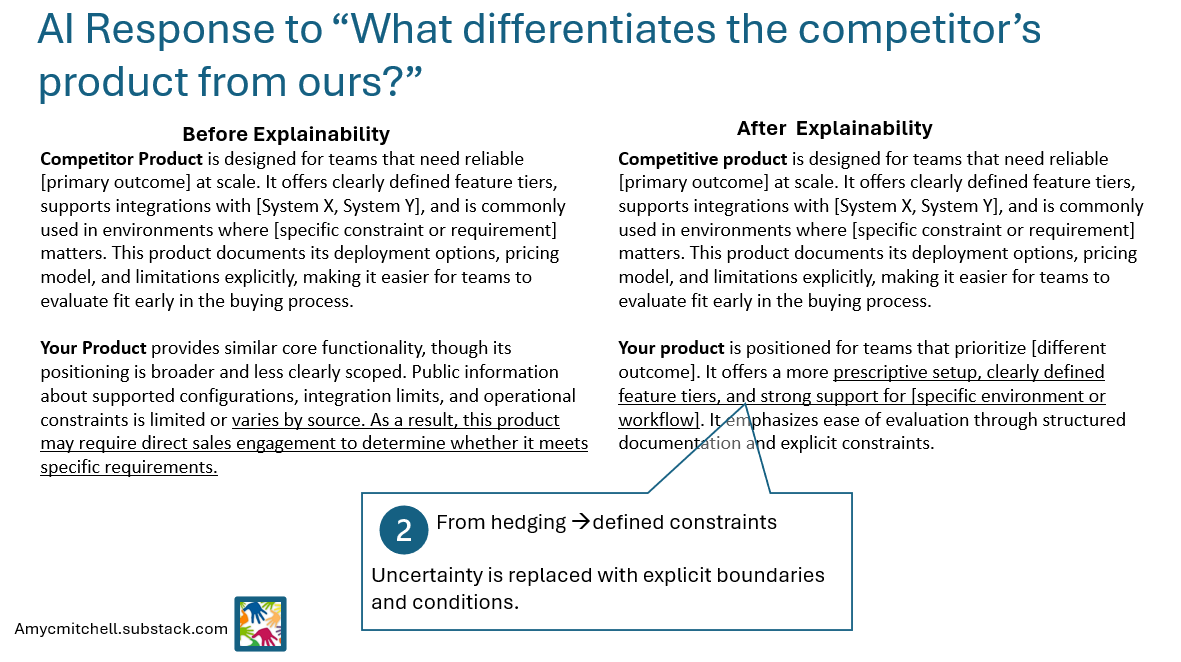

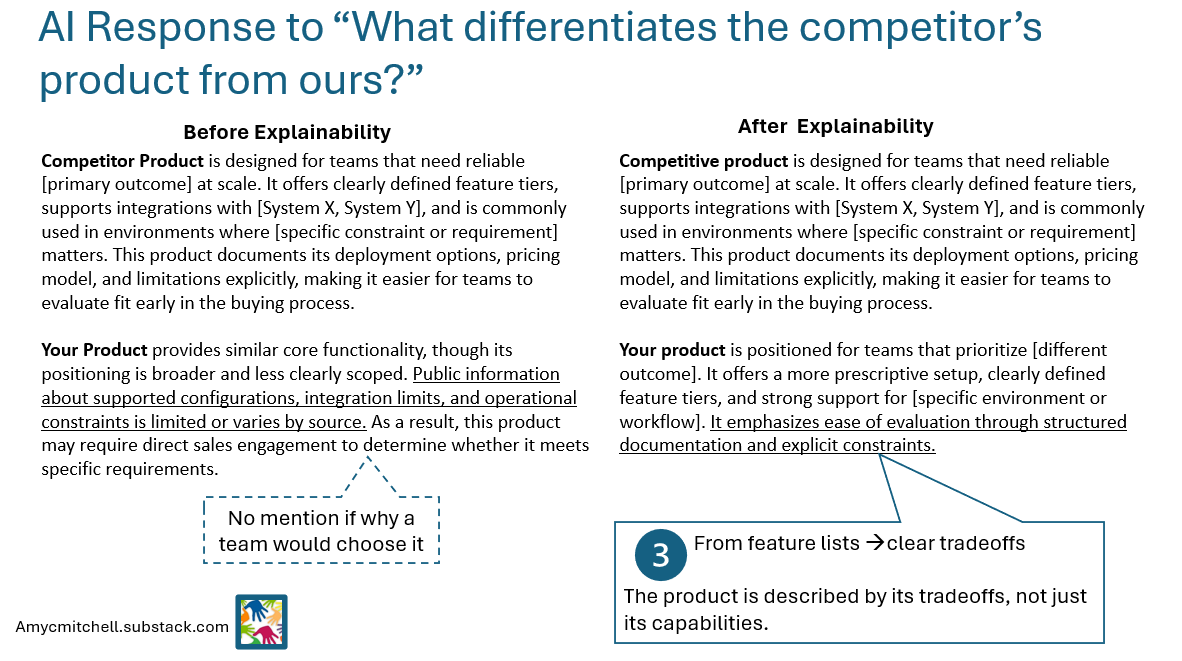

Here’s what AI says:

Competitor Product is designed for teams that need reliable [primary outcome] at scale. It offers clearly defined feature tiers, supports integrations with [System X, System Y], and is commonly used in environments where [specific constraint or requirement] matters. This product documents its deployment options, pricing model, and limitations explicitly, making it easier for teams to evaluate fit early in the buying process.

John’s Product provides similar core functionality, though its positioning is broader and less clearly scoped. Public information about supported configurations, integration limits, and operational constraints is limited or varies by source. As a result, this product may require direct sales engagement to determine whether it meets specific requirements.

John recognizes the problem immediately. His request to improve product information for AI has never been started.

He thought the requirement was straightforward:

Clear product positioning

Business context and use cases

Explicit constraints on scaling up and scaling out

Differentiators called out

Outdated terminology refreshed

But the requirement sits in the backlog because no one owns the crossover between technical detail and business impact.

John’s instinct wasn’t wrong. But product explainability is new—and it demands structure in a way most product teams weren’t designed for.

Explainability Isn’t Documentation. It’s Product Representation at Scale

Two things blocked John’s request:

Product explainability is so new that no one knows how to measure it

Product explainability is a “forever feature” that never finishes

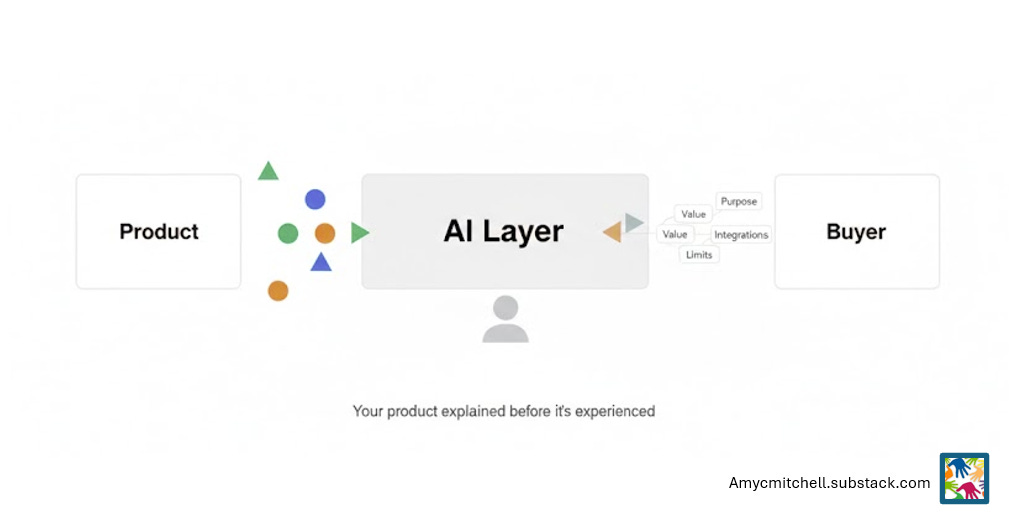

Product explainability is how your product is represented when you’re not in the room.

AI doesn’t understand intent. It assembles answers from what exists and uses your product information to explain your product to potential customers.

If your product can’t explain itself, AI will do it with partial context.

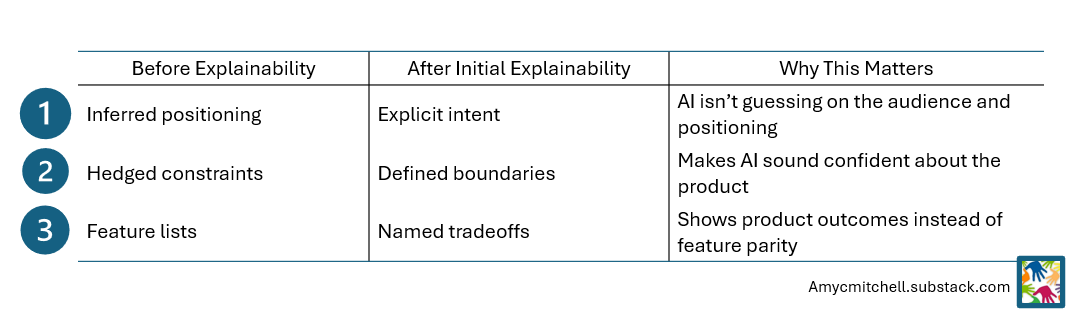

Here’s what product representation looks like before and after explainability.

Why This Feels Hard (and Why It’s New)

Before AI, structured product information mattered less. Buyers could contact sales or consult experts. Product managers could join conversations to clarify details.

Product managers who could bridge product, business, and ordering logic handled most questions live.

With AI in the mix, product information becomes the basis for first impressions. AI is consulted before sales and before product managers, so the gap those live conversations used to cover is exposed.

When product constraints aren’t explicit, AI fills the gaps.

Builder PMs Treat Explainability as a System

Builder PMs understand how product work actually gets done across roles. They’re fluent enough to ask better questions and surface evidence earlier.

Like John, builder PMs bridge product, business, and ordering knowledge and push that knowledge into the open so AI can find it.

John didn’t ask for “more documentation.” He knew that anything AI consumes must have structure, currency, and consistency.

The scope felt overwhelming because explainability isn’t a one-time deliverable. It’s a system.

A product explainability system has three elements:

A single source of truth for product knowledge

A clear structure for that knowledge

Triggers that keep it updated
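The three elements above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the names `ContextEntry` and `ProductContext`, the fields, and the 90-day review window are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class ContextEntry:
    """One piece of product knowledge (hypothetical structure)."""
    topic: str           # e.g. "positioning", "scaling constraints"
    summary: str         # the explanation AI and people should find
    owner: str           # who keeps this entry accurate
    last_reviewed: date  # when the owner last confirmed it


@dataclass
class ProductContext:
    """Single source of truth: every entry lives in one place, keyed by topic."""
    entries: dict[str, ContextEntry] = field(default_factory=dict)

    def upsert(self, entry: ContextEntry) -> None:
        # Adding a topic twice replaces the old entry instead of duplicating it.
        self.entries[entry.topic] = entry

    def stale_topics(self, today: date, max_age_days: int = 90) -> list[str]:
        """Trigger: topics not reviewed within max_age_days (an assumed threshold)."""
        cutoff = today - timedelta(days=max_age_days)
        return sorted(t for t, e in self.entries.items() if e.last_reviewed < cutoff)


context = ProductContext()
context.upsert(ContextEntry("positioning", "Who the product is for.", "pm", date(2025, 1, 10)))
context.upsert(ContextEntry("scaling constraints", "Known limits.", "engineering", date(2025, 5, 1)))

print(context.stale_topics(today=date(2025, 6, 1)))  # prints ['positioning']
```

The point of the sketch is the shape, not the tooling: one place to look, the same fields on every entry, and a simple check that tells an owner when something needs a review.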

The question isn’t whether this system is valuable—it’s how to start.

Builder PMs don’t just surface features; they surface tradeoffs.

Start with a Small Explainability Experiment

Before creating new requirements, gather what already exists. Most product managers know where to find:

Core marketing materials

Key sales decks

Engineering white papers

Ordering guides with critical details

Start using this material as product context. You’ll quickly notice gaps—missing FAQs, unclear positioning, outdated constraints. Each gap becomes a small, finite requirement.

Instead of pushing one massive initiative, you end up with smaller explainability tasks that can be distributed to the right experts.
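One way to picture this experiment is as a checklist compared against an inventory. The sketch below assumes a hypothetical inventory in which each existing document is tagged with the topics it covers; the topic names echo John's requirement list, and the document names and the `find_gaps` helper are made up for illustration.

```python
# Topics the product context should cover (adapted from John's requirement list).
REQUIRED_TOPICS = [
    "positioning",
    "use cases",
    "scaling constraints",
    "differentiators",
    "current terminology",
]


def find_gaps(materials: dict[str, set[str]]) -> list[str]:
    """Return required topics that no collected material covers, in checklist order."""
    covered = set().union(*materials.values())
    return [topic for topic in REQUIRED_TOPICS if topic not in covered]


# Hypothetical inventory: which topics each existing document addresses.
materials = {
    "marketing overview": {"positioning", "use cases"},
    "sales deck": {"use cases", "differentiators"},
    "ordering guide": {"scaling constraints"},
}

for gap in find_gaps(materials):
    print(f"New task: document '{gap}'")  # prints: New task: document 'current terminology'
```

Each line of output is one small, finite requirement that can go to the person best placed to answer it.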

This step alone increases usage of product context by both AI and people. The next challenge is keeping it current.

If You Only Build One Explainability Item

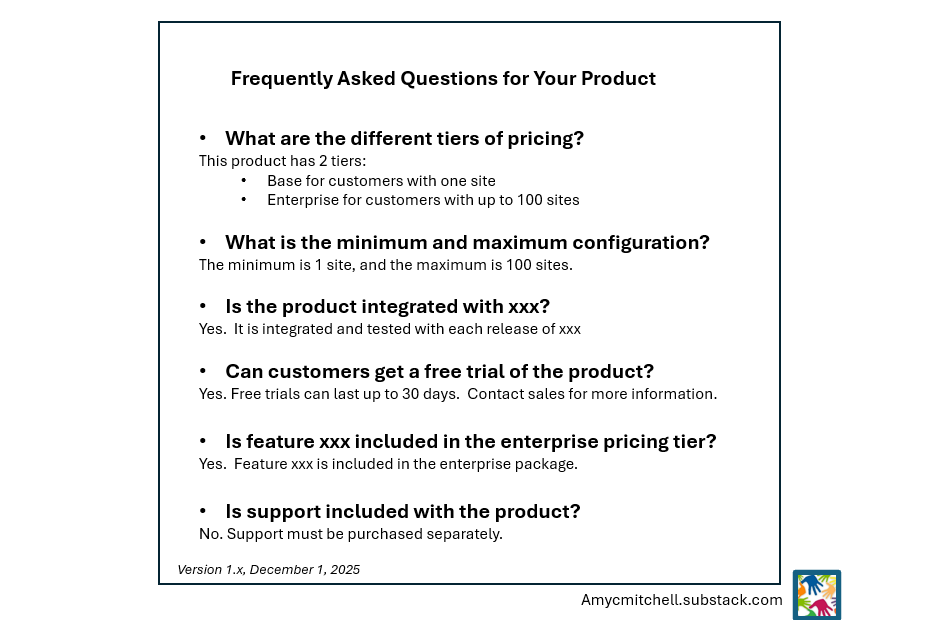

If you build just one thing, make it a Product Ordering FAQ.

This is where explainability pays off fastest:

What feature combinations are supported or unsupported?

What scales cleanly—and what requires validation?

When should sales or product be involved?

What tradeoffs should buyers expect?

This knowledge already exists. It’s scattered across decks, Slack threads, and people’s heads. The experiment is making it visible, structured, and current.

When this FAQ exists:

AI stops guessing

Sales stops escalating

Product managers stop re-explaining
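A Product Ordering FAQ does not need special tooling to be structured. As a rough sketch, assuming a hypothetical `FaqEntry` record with an optional escalation contact and a last-verified date, the same entries can be rendered as plain text that both people and AI can read. The bracketed values are placeholders, not real product details.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class FaqEntry:
    question: str
    answer: str
    escalate_to: Optional[str]  # "sales", "product", or None for self-serve answers
    last_verified: date         # keeps the entry visibly current


def render(entries: list[FaqEntry]) -> str:
    """Render the FAQ as plain text, one question-and-answer block per entry."""
    blocks = []
    for e in entries:
        lines = [f"Q: {e.question}", f"A: {e.answer}"]
        if e.escalate_to:
            lines.append(f"Contact: {e.escalate_to}")
        lines.append(f"Last verified: {e.last_verified.isoformat()}")
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks)


faq = [
    FaqEntry(
        "Is [Feature A] supported together with [Feature B]?",
        "Yes, on [Tier X] and above.",
        None,
        date(2025, 6, 1),
    ),
    FaqEntry(
        "What requires validation before scaling out?",
        "Deployments beyond [documented limit] need a review.",
        "product",
        date(2025, 6, 1),
    ),
]

print(render(faq))
```

Recording when to involve sales or product as a field, rather than leaving it implied, is what lets the FAQ answer the escalation question on its own.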

Don’t Automate What You Can’t Yet Trust

As teams begin using the experimental product context, structure emerges naturally—around releases, features, and use cases.

Builder PMs encourage the whole team to contribute. Over time, frequently used materials are consolidated into a version-controlled product context.

This context shows its value when:

Updating it becomes complex

Version collisions hit the critical path

That’s when automation becomes worth the effort.

Early Signals That Explainability Is Working

Explainability is hard to measure because it’s new and AI-driven. Usage by AI and customers can feel invisible.

But early signals show the team is unstuck:

Sales consults the product knowledge base before contacting product managers

Customers arrive informed before speaking to sales

AI responses reflect current product reality

Back to John

John’s explainability requirement never left the backlog.

Instead of waiting for new ownership models or tooling, he shifted into builder PM mode. He started with customer slide decks and marketing materials. The team added an FAQ and ordering guidelines.

When John rechecked AI comparisons, the difference was clear:

Competitor Product is designed for teams that need reliable [primary outcome] at scale. It offers clearly defined feature tiers, supports integrations with [System X, System Y], and is commonly used in environments where [specific constraint or requirement] matters. This product documents its deployment options, pricing model, and limitations explicitly, making it easier for teams to evaluate fit early in the buying process.

John’s Product is optimized for teams that prioritize [different outcome]. It offers a more prescriptive setup, clearly defined feature tiers, and strong support for [specific environment or workflow]. It emphasizes ease of evaluation through structured documentation and explicit constraints.

Conclusion: Explainability Is Where Context Engineering Becomes Real

Explainability is where context engineering stops being conceptual.

Builder PMs don’t wait for perfect ownership or tooling. They design small systems that keep product knowledge accurate as the product scales. And they let AI work with that truth instead of filling in the gaps.


Connect with Amy on LinkedIn, Threads, Instagram, and Bluesky for product management insights daily.
